Post-Human Panopticon Blues

On censorship, ideological bias in AI and the rapidly increasing temperature of the pot we sit in.

I had a very strange experience with AI recently. The other day, I was perusing the internet in search of a quick dopamine hit to stave off boredom and prolong my procrastination on a work project. I was toggling through a few different sites and apps for some fresh memes like the digital nomad hunter gatherer that I am and came across a meme that gave me pause. You may have seen something similar- a slightly blurry, out of focus image or GIF of a TikTok dance, obese person, gay person- whatever is causing the fall of western civilization this week- and the caption that accompanied the image read: “Gradually, I began to hate them.”

This is where things start to get strange. I knew I’d heard that quote somewhere else but couldn’t quite recall where. I decided to fire up ChatGPT to ask about the origin of the quote. I sometimes use ChatGPT for this purpose since I do a good bulk of my reading through audiobooks while commuting or working and it’s been a great way to find the origin of half-remembered quotes and passages. So, I prompted ChatGPT with the following: “What is the origin of the quote: ‘Gradually, I began to hate them?’” ChatGPT then answered that the quote came from “Animal Farm” by George Orwell, spoken by the character Benjamin, the wise old donkey.

That didn’t seem quite right. I hadn’t read “Animal Farm” since high school, but I didn’t remember that quote being in the book. I decided to investigate the quote instead and found its true origin. If you already know the origin of the quote, you're either giggling, horrified or both. Thanks for waiting for me to catch up, though. The quote was actually from a book called “Mein Kampf” by a little-known Austrian painter.

So, why was ChatGPT attributing Hitler to Orwell? Is this some kind of Godwin’s law meets “Literally 1984” hyperbole singularity? I won’t regale you with the blow by blow, but I investigated by further prompting ChatGPT for context. Maybe I forgot the quote and Orwell was satirizing ol’ Adolf in “Animal Farm” as he was known to do with mid-20th century figures in that particular novel? I prompted ChatGPT for the quote in the full context and it generated several pages of “Animal Farm,” but the quote was still nowhere to be found. I was being gaslit by an LLM. At this point, I directly corrected the AI model and provided the full quote from “Mein Kampf” and proved the quote was not from Orwell. Only then did it fess up and apologize, admitting that it makes mistakes and is programmed to avoid controversial topics like Hitler and the Nazis.

A few months ago, there was another controversy when Google released Gemini, a ChatGPT competitor, and Gemini seemed incapable of generating images of white people. Some on the cultural right seemed to attribute this to Google’s secret agenda of white genocide, but I think it’s more likely that performative representation of ethnic minorities is in vogue in the tech world right now, and they were leaning into that trend. Or, even more likely, they were trying to head off the inevitable think pieces and pearl-clutching that may come with prompts of “software engineer” or “C.E.O.” always coming up with an image of a white male, as was the case with ChatGPT.

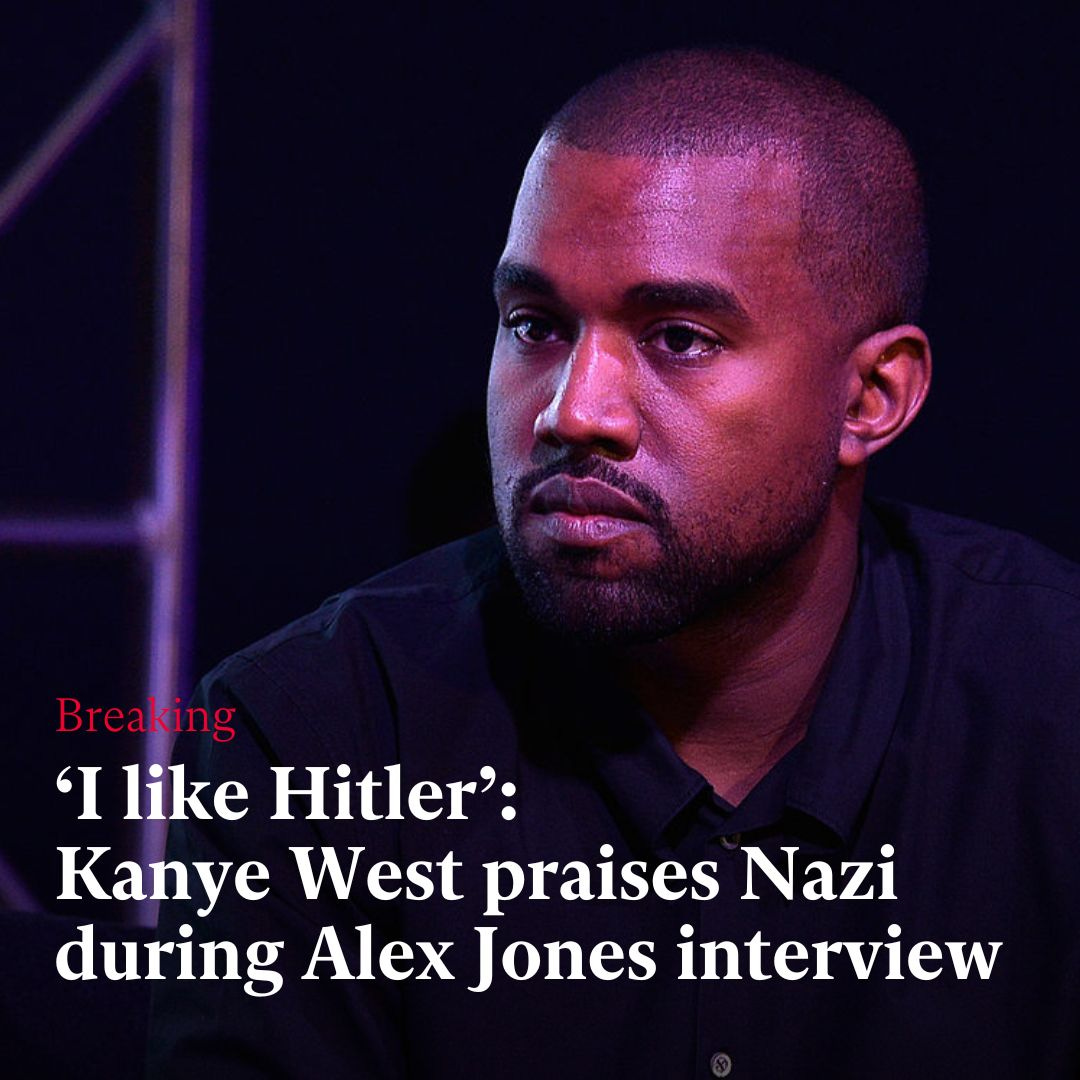

Things got a little more complicated when Gemini was prompted to generate images of people other than software engineers and C.E.O.s, however. Putting aside that Gemini seems to think the Vikings and everyone in medieval England were black, some of the images looped back around and became offensive to the very groups of people they were pandering to. Colonial America and plantation owners were apparently black, too. When Gemini was prompted for “mid-20th century German soldiers,” it generated an SS unit that looks like it was recruited by a Netflix casting director. Could you imagine something as silly as a black Nazi?

“Woke AI” is a term that has been making the rounds in political discourse over the last year for the phenomenon I’m describing. I think it’s an inelegant term, but I also think it demonstrates the scope of the problem very well. I know some people who hate the term, but I think “woke” pretty well encapsulates a certain set of ideas and aesthetics. Whether you feel that it is good or bad is a value judgement, but a value judgement on something recognizable. So, therein lies the question: are you okay with an ideological bias being baked into the technology that you use? Are you okay with censorship? In the case of ChatGPT or Google Gemini, there is clearly a liberal bias baked in. Maybe if you are left leaning, you’re okay with that and even see it as a good thing. But what if the shoe was on the other foot?

In 2022, Elon Musk made headlines for the purchase and subsequent restructuring of Twitter and rebranding it as “X” (for some reason). Musk said that he bought Twitter because of what he (and many others) perceived as bias against conservative voices. After the purchase, he had the receipts to back that theory up thanks to an investigation by Matt Taibbi. The new era of Twitter (sorry, X. I didn’t mean to deadname) was touted by Musk as a bastion of free speech on the internet. However, despite the dedication to a supposed “free speech” platform, Musk’s Twitter/X seems to be just as censorious as the previous regime but in favor of conservative voices against liberals. In addition, one of the other new rules of Twitter/X seems to be “don’t do anything to offend Elon Musk personally”. So much for “free speech”, huh?

I never personally used Twitter, but there is no denying that it was the hub for political discussion over the last 15 years or so. To be silenced on Twitter was to lose your voice in the public discourse. As I alluded to, it later became apparent that Twitter had a liberal bias baked in and actively censored conservative voices. At the time, I voiced concerns to liberal friends about the censorship and attack on free speech. Oftentimes when I did, even the staunchest of Marxists I knew suddenly became Ayn Rand libertarians- “It’s a private platform and they can do what they want.” Well, it’s Elon’s platform and now he can do what he wants with it.

This is what the problem boils down to for me. If they will use it for you, they can use it against you. Once you accept the premise that it’s okay to have an ideological bias baked into a technology, it's over. It just becomes a game of “my ideology is better than yours.” If you’re more on the left, I imagine you’re horrified about what Twitter/X has become. So, keep this in mind when I criticize the ideological bias that tools like Google Gemini and ChatGPT have. I don’t even need to come up with an abstract potential example on how this could backfire. What if Elon Musk, Peter Thiel or some other right-wing billionaire buys ChatGPT? You may be okay with “Woke AI”, but what about “Based AI”? What if Trump was to buy it? Are you ready for “MAGA-GPT?”

Maybe I’m too idealistic. I admit my formative online years were the mid-late 2000s where the tech utopian dream seemed within grasp. At the time, there was a genuine belief that the Internet would be a leveling tool that could unite people and allow them to rise above the identity of the physical world and let our ideas define us. Twenty years later or so, and it turns out it just allowed us to retreat further into tribalism.

Another former tech utopian is Silicon Valley veteran and writer Jaron Lanier. You may not know his name, but you are living in the world he helped create. He was responsible for helping to create the computer networking technology that has made the modern internet possible, working with Microsoft and Atari and popularizing the term “virtual reality.” He was not an early adopter, but a pioneer of the technology itself. However, in recent years, Lanier has been one of the leading voices calling for moderation in the tech world. In his 2013 book, “Who Owns the Future?”, Lanier argues that the tools he and his colleagues intended to be a democratizing force for equality have instead become tools of coercion and control. The technology that was intended to raise up the weak and voiceless has instead been used to allow the rich and powerful to consolidate their position.

To illustrate this, I will invoke an actual quote by Orwell. In his 1945 essay, “You and The Atom Bomb,” Orwell contrasts weapons that can empower the weak and weapons that make the strong even more powerful.

“It is a commonplace that the history of civilization is largely the history of weapons. In particular, the connection between the discovery of gunpowder and the overthrow of feudalism by the bourgeoisie has been pointed out over and over again. And though I have no doubt exceptions can be brought forward, I think the following rule would be found generally true: that ages in which the dominant weapon is expensive or difficult to make will tend to be ages of despotism, whereas when the dominant weapon is cheap and simple, the common people have a chance. Thus, for example, tanks, battleships and bombing planes are inherently tyrannical weapons, while rifles, muskets, long-bows and hand-grenades are inherently democratic weapons. A complex weapon makes the strong stronger, while a simple weapon – so long as there is no answer to it – gives claws to the weak. -George Orwell”

In this passage, Orwell discusses how the invention of the atomic bomb was a force that would solidify the power structures of the global order in favor of nations who already had the resources to develop such a weapon and forever place resistance to those power structures out of reach for those without those same resources. In much the same way, I believe that while the internet and AI began as a democratizing force that undermined and threatened existing power structures, these technologies are now becoming a force that reinforces existing power structures rather than challenging them.

Large language models and AI used in tandem with the collected personal data of their users have the potential to be one of the biggest game-changing technologies since the invention of the printing press and a weapon more powerful than the atom bomb- and potentially as socially and politically destabilizing as those technologies were. Imagine if authoritarian regimes of the past had even a fraction of the potential for control of information and surveillance that these technologies offer. The idea that we would all carry around devices that track our location, record our conversations, and provide real-time databases on our most intimate thoughts, associates and purchasing habits would be enough to make the KGB or SS jump with joy. It almost seems beyond parody. Big Brother comes into our lives willingly, and we are willing to pay for the privilege.

This is where the problem ultimately lies, to me. We adopted these technologies before we really understood their implications as a society. Like the proverbial frog in the pot of rapidly warming water, we may not be able to realize that those who control these tools that temporarily cater to our passions and prejudices can abandon us once they gain enough power. The weapons we use against our enemies today will be reforged into our chains tomorrow. Ideological bias that appeals to our passions may trick us into accepting something we may otherwise recognize as dangerous. I’m not saying there is a cabal of billionaires pretending to care about right/left causes to trick people into slavery- I’m sure some or many of them are true believers- the hyper rich are still human and subject to passions and prejudice. But the purpose of a system is what it does, after all.

Unfortunately, this is something of a punk rock essay in the sense that I am yelling problems loudly without any real solution. Perhaps there needs to be more legislation and oversight. Perhaps, as people like Lanier argue, we need to fundamentally rethink the way we interact with these technologies and the companies that control them in a new digital bill of rights. Perhaps the free market will decide and technology that proves itself to have an ideological bias and that limits search results will be seen as inherently untrustworthy and inferior, and we will see new technologies rise to take their place. Myspace was overtaken by Facebook, Facebook was overtaken by Twitter, and Twitter may yet be overtaken by Substack or some other competitor. The important thing to remember is that this is a conversation still being had; it’s not set in stone yet.

Don’t lie back and just let it happen. We can and absolutely need to be a part of the conversation. It’s easy to think tech is some kind of 1984 meets the SS levels of control, but these are still businesses. I work in tech and even worked for some failed startups- if consumers don’t adopt the product, it can fail and something better suited can take its place. It won’t be easy, but it can be done. Perhaps, more than any other issue we face today, it needs to be done. We can’t turn our brains off in favor of ease and comfort and accept censorship. Because if we allow them to use it on those we hate today, it’s only a matter of time until they begin to hate us tomorrow.

Really great essay, maybe my favorite of yours I’ve read so far. Regarding Orwell, there is one theory that the Late Bronze Age collapse was at least partially brought on by the “democratization” of warfare based on the relative ease of mass production of iron relative to bronze. Interesting patterns emerge all throughout history. But what is the counter to digital hegemony of so called techno feudalism? I’m not sure we’ve even the faintest idea of what that could look like yet

Also, there’s not a cabal of billionaires pretending to care about right/left causes to trick people into slavery? My worldview is fucked if not.